A Structural Model for Evaluating Science-Based Ventures

- Arise Innovations

Why evaluation fails in science ventures

Why intuition, pitch quality, and capital signals dominate decisions

In the absence of a shared structural model, evaluation defaults to what is legible. Reviewers rely on intuition, founders are assessed on clarity and confidence, and capital raised becomes a shorthand for progress. These signals are not irrational. They are efficient proxies in environments where feedback is fast, outcomes are reversible, and execution risk dominates.

Science ventures do not meet those conditions.

Evidence matures slowly, constraints emerge late, and many decisive risks are unobservable at the moment decisions are made. Yet the evaluation apparatus remains optimized for speed, comparability, and narrative coherence, not for latent uncertainty. As a result, what gets rewarded is not readiness, but plausibility.

The cost of narrative-driven evaluation in scientific contexts

Narratives simplify complexity by necessity. In science-based ventures, this simplification is costly. When stories substitute for structure, evaluation shifts from interrogating system behavior to validating consistency. Technical unknowns are reframed as “to be solved with funding”, industrial constraints are postponed, and market assumptions harden long before they can be tested. The stronger the narrative, the more pressure it creates to move forward, even when foundational questions remain open. Over time, this produces a form of collective self-reinforcement where decisions appear reasonable in isolation but become destructive in sequence. What looks like momentum is often just the accumulation of commitments under unresolved uncertainty.

False positives, false negatives, and why both are structural, not accidental

From the outside, failures in science ventures are often explained as bad bets or execution mistakes. In reality, the dominant errors are selection errors embedded in the evaluation logic itself. False positives emerge when ventures with compelling narratives and early capital signals are advanced despite unresolved structural mismatches between evidence, scale, and capital requirements. False negatives occur when technically strong ventures are filtered out because they lack polish, speed, or fundability at the wrong moment. Both outcomes arise from the same root cause: evaluation frameworks that are blind to timing, sequencing, and system constraints. These are not edge cases or random noise. They are predictable artifacts of evaluating scientific systems with tools designed for a different class of innovation.

Science ventures are systems, not projects

Why science-based ventures behave differently from software or services

Science-based ventures are governed by physical, biological, or chemical reality rather than by user behavior alone. Progress is constrained by experiments, material limits, regulatory exposure, and scale effects that cannot be abstracted away. Unlike software or service businesses, iteration is expensive, slow, and often irreversible. Feedback does not arrive in days or weeks but in months or years, and success at small scale says little about viability at the next one. Treating these ventures as projects with linear milestones ignores the fact that their core risk is not execution speed but structural coherence across domains.

Non-linearity, irreversibility, and path dependence

In science ventures, effects are rarely proportional to inputs. A small change in evidence can invalidate an entire scale-up strategy, while large capital injections may produce no meaningful reduction in uncertainty. Many decisions create irreversible commitments: manufacturing choices, regulatory pathways, IP structures, and early capital terms cannot be easily undone without destroying time, credibility, or optionality. As these commitments accumulate, the venture becomes path-dependent. Early choices constrain future options, not because they were wrong in isolation, but because they locked the system into a trajectory that no longer fits the evolving evidence.

Evaluation as system state assessment, not snapshot scoring

Because science ventures evolve as coupled systems, evaluation cannot be reduced to a score or a momentary judgment. What matters is not how impressive a venture looks at a given point, but how its components interact under current and future constraints. A meaningful evaluation asks whether evidence, industrialization, market assumptions, and capital structure are aligned at this moment in time, and whether the next decision expands or collapses optionality. This shifts evaluation from ranking ventures against each other to diagnosing system state. The output is not a winner or loser, but a clear understanding of what moves are structurally permissible next, and which ones would silently amplify failure.

The four dimensions that actually govern science ventures

Most evaluation frameworks treat science ventures as a bundle of attributes: strong technology, large market, experienced team, sufficient funding. Each element is assessed separately, scored, and aggregated into a decision. This approach creates an illusion of rigor while missing the core problem. Science ventures are governed by a small number of fundamental dimensions whose interaction determines whether progress is possible at all. Understanding these dimensions, and how they constrain each other over time, is the difference between evaluating potential and evaluating reality.

Taken together, these four dimensions (evidence, industrialization, market assumptions, and capital structure) define the actual operating space of a science venture at any given moment. Strength in one cannot compensate for misalignment in another, and progress in one domain can actively damage the system if it forces premature movement elsewhere. Evaluation that ignores this interdependence systematically misclassifies ventures, rewarding coherence of story over coherence of structure. A structural model does not ask whether a venture looks promising. It asks whether its next step is physically, economically, and temporally possible.
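The claim that strength in one dimension cannot compensate for misalignment in another can be made concrete with a small sketch. It assumes, purely for illustration, that each dimension could be given an alignment value between 0 and 1. The scale, the numbers, and the function names are hypothetical and are not part of the model described here, which explicitly resists reduction to a score. The point is only to contrast what an averaged score reports with what the weakest dimension implies.

```python
from statistics import mean

# Hypothetical 0-to-1 alignment values, invented for illustration only.
venture = {"evidence": 0.9, "scale": 0.2, "market": 0.8, "capital": 0.9}


def aggregate_score(dims: dict[str, float]) -> float:
    """Attribute-style scoring: strong dimensions average out weak ones."""
    return mean(dims.values())


def binding_constraint(dims: dict[str, float]) -> tuple[str, float]:
    """Structural view: the least aligned dimension bounds the next step."""
    name = min(dims, key=dims.get)
    return name, dims[name]


print(f"aggregate score: {aggregate_score(venture):.2f}")
print("binding constraint:", binding_constraint(venture))
```

With these made-up numbers the average comes out at roughly 0.7, which reads as a healthy venture, while the structural view points at scale as the dimension that limits the next move.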

Development stage is not readiness

Why TRLs describe position but not viability

Technology Readiness Levels are often treated as a proxy for investability or maturity. In reality, TRLs only describe where a technology sits relative to a predefined development ladder. They say nothing about whether the surrounding conditions required for progress actually exist. Two ventures at the same TRL can face radically different futures depending on evidence stability, scale constraints, regulatory exposure, and capital pressure. TRLs locate a technology in space. They do not describe whether movement from that position is feasible.

The difference between technical progress and structural readiness

Technical progress measures whether something works better than before. Structural readiness measures whether the system around it can absorb the next step. A prototype can improve while becoming harder to scale. Performance gains can introduce new manufacturing constraints. Additional features can trigger regulatory reclassification. From a technical perspective, the venture is advancing. From a structural perspective, optionality may be collapsing. Readiness exists only when technical progress and structural conditions move in the same direction.

How ventures stall despite advancing stages

Many science ventures do not fail at a single moment. They stall. They continue to advance through stages, secure pilots, and raise incremental funding, yet never cross the threshold into sustainable deployment. The stall occurs when accumulated commitments outpace uncertainty reduction. The venture reaches a point where the next required step demands resources, partners, or timelines that the system can no longer support. From the outside, this looks like bad luck or market timing. Structurally, it is the predictable outcome of treating stage advancement as progress while ignoring readiness.
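The stall condition, accumulated commitments outpacing uncertainty reduction, can be sketched as a running comparison. This is a rough illustration under strong assumptions: it pretends that commitments and resolved questions can be counted in comparable units per stage, and the stage names and tallies are invented. In practice both quantities are qualitative.

```python
from itertools import accumulate

# Hypothetical per-stage tallies: irreversible commitments taken on and
# open questions actually resolved. The counts are illustrative only.
stages = ["lab", "prototype", "pilot", "pre-commercial"]
commitments = [1, 2, 3, 4]
resolved = [2, 2, 1, 1]


def first_stall(stages, commitments, resolved):
    """Return the first stage at which cumulative commitments exceed
    cumulative uncertainty reduction, or None if that never happens."""
    running = zip(stages, accumulate(commitments), accumulate(resolved))
    for stage, total_committed, total_resolved in running:
        if total_committed > total_resolved:
            return stage
    return None


print(first_stall(stages, commitments, resolved))
```

In this toy sequence the venture keeps advancing through every stage, yet the running totals cross at the pilot stage, which is where the structural stall begins even though stage advancement continues.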

Uncertainty is not risk and must be treated differently

Known risks vs unpriceable uncertainty

Risk assumes that outcomes and probabilities can be reasonably estimated. Uncertainty in science ventures violates that assumption. At early and mid stages, many of the most consequential unknowns cannot yet be measured, priced, or modeled. Mechanisms may be incomplete, scale effects unobserved, regulatory interpretation unresolved. Treating these unknowns as risks to be discounted creates a false sense of control. Structural evaluation instead asks which uncertainties are already observable, which are not yet accessible, and which decisions would prematurely force them into existence.

Why early financial models fail in science ventures

Financial models depend on stable assumptions about cost curves, timelines, and revenue formation. In science ventures, these parameters are not just uncertain; they are undefined. Early models often harden guesses into forecasts, then use those forecasts to justify capital commitments that compress time and distort priorities. When the underlying science evolves, the model becomes an anchor rather than a guide. Failure then appears as deviation from plan, rather than as evidence that the plan was never structurally grounded.

How uncertainty migrates across domains over time

Uncertainty in science ventures does not disappear; it moves. Early scientific uncertainty may later reappear as manufacturing yield issues, regulatory delays, or market resistance. If not addressed in the domain where it originates, it resurfaces downstream where it is far more expensive to resolve. Structural evaluation tracks this migration explicitly, asking whether uncertainty is being reduced or merely displaced by each decision.
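Tracking migration explicitly could look something like the ledger below. This is a minimal sketch, not a description of any actual tool: the `Uncertainty` class, its fields, and the domain labels are all hypothetical names chosen for the example. Each entry remembers where an uncertainty originated, where it currently sits, and which decisions moved or resolved it.

```python
from dataclasses import dataclass, field


@dataclass
class Uncertainty:
    name: str
    origin: str                # domain where the uncertainty first arose
    domain: str                # domain where it currently sits
    resolved: bool = False
    history: list = field(default_factory=list)

    def displace(self, new_domain: str, decision: str) -> None:
        """Record a decision that moved the uncertainty without resolving it."""
        self.history.append((decision, self.domain, new_domain))
        self.domain = new_domain

    def resolve(self, decision: str) -> None:
        """Record a decision that actually removed the uncertainty."""
        self.history.append((decision, self.domain, None))
        self.resolved = True


def displaced(ledger: list[Uncertainty]) -> list[str]:
    """Names of unresolved uncertainties that have left their origin domain."""
    return [u.name for u in ledger if not u.resolved and u.domain != u.origin]


# Hypothetical example: a scientific unknown pushed downstream by a scale-up decision.
mechanism = Uncertainty("incomplete mechanism", origin="science", domain="science")
mechanism.displace("manufacturing", decision="begin scale-up")
print(displaced([mechanism]))
```

The question the ledger answers after each decision is the one posed above: did the entry get resolved, or did only its `domain` change?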

Capital as an active force, not neutral input

How funding reshapes incentives, timelines, and decision order

The moment capital enters a venture, it changes behavior. Milestones are pulled forward, narratives solidify, hiring accelerates, and external expectations form. Capital determines which questions must be answered next and which ones are postponed. This is not a side effect; it is the primary effect. Evaluating capital as a passive enabler ignores how strongly it shapes sequencing and decision order.

When capital reduces uncertainty and when it amplifies failure

Capital reduces uncertainty when it is applied to questions that are already structurally accessible: controlled experiments, defined scale tests, or bounded pilots. It amplifies failure when it forces commitments before those questions can be answered. In those cases, more money increases burn, complexity, and rigidity without increasing knowledge. The result is faster movement toward a dead end, not faster learning.
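The distinction can be written down as a simple rule of thumb. The sketch below is an illustration under stated assumptions: the `UseOfFunds` type and its two flags are hypothetical, and the third outcome, spending that is premature but still reversible, is an added category for completeness that the text above does not discuss.

```python
from dataclasses import dataclass


@dataclass
class UseOfFunds:
    label: str
    question_accessible: bool   # can the targeted question be answered now?
    forces_commitment: bool     # does the spending lock in an irreversible step?


def capital_effect(use: UseOfFunds) -> str:
    """Classify a planned use of funds by its likely structural effect."""
    if use.question_accessible:
        return "reduces uncertainty"
    if use.forces_commitment:
        return "amplifies failure"
    # Assumed extra category, not taken from the article.
    return "premature but reversible"


plans = [
    UseOfFunds("bounded pilot", question_accessible=True, forces_commitment=False),
    UseOfFunds("full-scale plant", question_accessible=False, forces_commitment=True),
]
for plan in plans:
    print(plan.label, "->", capital_effect(plan))
```

The useful part is less the classification itself than the two questions it forces before money is committed: is the question answerable yet, and can the step be undone?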

Common capital-induced collapse patterns

Recurring patterns include early equity that locks in unrealistic timelines, large rounds raised on unstable evidence, and strategic capital that narrows market options too soon. These failures are rarely sudden. They unfold as a sequence of reasonable decisions made under increasing pressure, until reversal becomes impossible. Structural evaluation is designed to detect these patterns before they fully materialize.

Structural evaluation as a decision tool

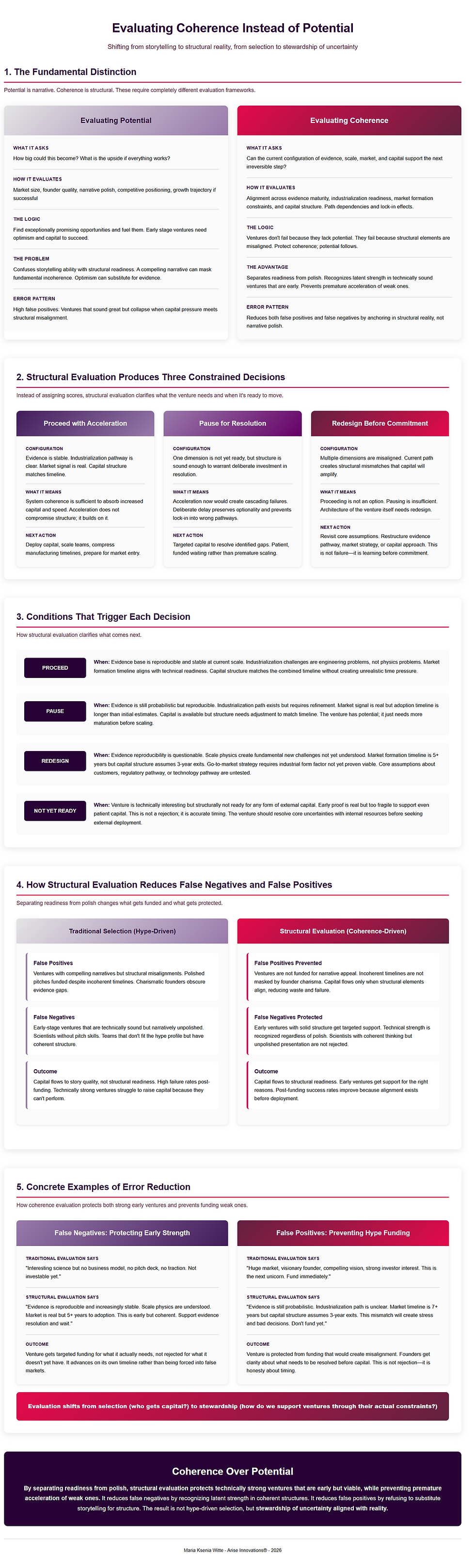

What it means to evaluate coherence instead of potential

Potential is narrative. Coherence is structural. Evaluating coherence means asking whether the current configuration of evidence, scale, market, and capital can support the next irreversible step. The question is not how big the opportunity could become, but whether the system can move forward without collapsing optionality.

How structural evaluation reduces false negatives without inflating hype

By separating readiness from polish, structural evaluation protects technically strong ventures that are early but viable, while preventing premature acceleration of weak ones. It reduces false negatives by recognizing latent strength, and reduces false positives by refusing to substitute storytelling for structure.

Who this model is for and how it changes decisions

Implications for investors, venture builders, and institutions

For investors, the model replaces pattern matching with state assessment. For venture builders, it informs sequencing and intervention rather than speed. For institutions, it provides a way to justify delay, redesign programs, and allocate capital without relying on cosmetic progress signals. Across all roles, it shifts decisions from reactive to deliberate.

Why better evaluation improves both outcomes and capital efficiency

When capital is deployed in alignment with structural readiness, less is wasted on accelerating failure. Fewer ventures are pushed into premature scale, and more survive long enough to resolve their core uncertainties. The result is not slower innovation, but fewer dead ends and higher return on invested effort and capital.

If evaluation is about understanding system state, the next question is action.

How should interventions be sequenced once the structure is visible? When should capital, partnerships, or governance changes be introduced, and in what order?

This article draws on the Deep Tech Playbook (2nd Edition). The playbook formalizes how scientific risk, capital sequencing, timelines, and institutional constraints interact across the venture lifecycle. It is designed for investors, policymakers, venture builders, and institutions working with science-based companies.

About the Author

Maria Ksenia Witte is a science commercialization strategist and the inventor of the 4x4-TETRA Deep Tech Matrix™, the world's first RD&I-certified operating system for evaluating and building science ventures. She works with investors, institutions, and venture builders to align decision-making frameworks, capital deployment, and evaluation models with the realities of science-driven innovation.

Copyright and Reuse

This article may be quoted, shared, and referenced for educational, research, and policy purposes, provided that proper attribution is given and the original source is clearly cited. Any commercial use, modification, or republication beyond short excerpts requires prior written permission.
